Docker docs:

Docker routes container traffic in the nat table, which means that packets are diverted before it reaches the INPUT and OUTPUT chains that ufw uses. Packets are routed before the firewall rules can be applied, effectively ignoring your firewall configuration.

This was a large part of the reason I switched to rootless podman for everything

Explicitly binding certain ports to the container has a similar effect, no?

I still need to allow the ports in my firewall when using podman, even when I bind to 0.0.0.0.

Also when using a rootful Podman socket?

When running as root, I did not need to add the firewall rule.

I haven’t tried rootful since I haven’t had issues with rootless. I’ll have to check on that and get back to you.

It’s better than nothing but I hate the additional logs that came from it constantly fighting firewalld.

My problem with podman is the incompatibility with portainer :(

Any recommendations?

cockpit has a podman/container extension you might like.

It’s okay for simple things, but too simple for anything beyond that, IMO. One important issue is that unlike with Portainer you can’t edit the container in any way without deleting it and configuring it again, which is quite annoying if you just want to change 1 environment variable (GH Issue). Perhaps they will add a quadlet config tool to cockpit sometime in the future.

i mean, you can just redeploy the container with the updated variable. thats kinda how they work.

CLI and Quadlet? /s but seriously, that’s what I use lol

Quadlets are so nice.

I assume portainer communicates via the docker socket? If so, couldn’t you just point portainer to the podman socket?

My impression from a recent crash course on Docker is that it got popular because it allows script kiddies to spin up services very fast without knowing how they work.

OWASP was like “you can follow these thirty steps to make Docker secure, or just run Podman instead.” https://cheatsheetseries.owasp.org/cheatsheets/Docker_Security_Cheat_Sheet.html

My impression from a recent crash course on Docker is that it got popular because it allows script kiddies to spin up services very fast without knowing how they work.

That’s only a side effect. It mainly got popular because it is very easy for developers to ship a single image that just works instead of packaging for various different operating systems with users reporting issues that cannot be reproduced.

No it’s popular because it allows people/companies to run things without needing to deal with updates and dependencies manually

I dont really understand the problem with that?

Everyone is a script kiddy outside of their specific domain.

I may know loads about python but nothing about database management or proxies or Linux. If docker can abstract a lot of the complexities away and present a unified way you configure and manage them, where’s the bad?

That is definitely one of the crowds but there are also people like me that just are sick and tired of dealing with python, node, ruby dependencies. The install process for services has only continued to become increasingly more convoluted over the years. And then you show me an option where I can literally just slap down a compose.yml and hit “docker compose up -d” and be done? Fuck yeah I’m using that

Another take: Why should I care about dependency hell if I can just spin up the same service on the same machine without needing an additional VM and with minimal configuration changes.

This only happens if you essentially tell docker “I want this app to listen on 0.0.0.0:80”

If you don’t do that, then it doesn’t punch a hole through UFW either.

If I had a nickel for every database I’ve lost because I let docker broadcast its port on 0.0.0.0 I’d have about 35¢

How though? A database in Docker generally doesn’t need any exposed ports, which means no ports open in UFW either.

I exposed them because I used the container for local development too. I just kept reseeding every time it got hacked before I figured I should actually look into security.

For local access you can use `127.0.0.1:80:80` and it won’t put a hole in your firewall.

Or if your database is accessed by another docker container, just put them on the same docker network and access it via container name, and you don’t need any port mapping at all.

Yeah, I know that now lol, but good idea to spell it out. So what Docker does, which is so confusing when you first discover the behaviour, is it will bind your ports automatically to `0.0.0.0` if all you specify is `27017:27017` as your port (without an IP address prefix). AKA what the meme is about.
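A rough Python sketch of the default described above. This is a simplified model, not Docker's actual parser: the names `parse_publish_spec`, `PortBinding` and `exposed_beyond_loopback` are made up for illustration, and it only handles IPv4 specs of the form `host_port:container_port` or `ip:host_port:container_port`.

```python
import ipaddress
from typing import NamedTuple


class PortBinding(NamedTuple):
    host_ip: str
    host_port: int
    container_port: int


# Assumption taken from the thread: with no IP prefix, the port is
# published on all IPv4 interfaces.
DEFAULT_HOST_IP = "0.0.0.0"


def parse_publish_spec(spec: str) -> PortBinding:
    """Parse 'host_port:container_port' or 'ip:host_port:container_port'."""
    parts = spec.split(":")
    if len(parts) == 2:
        host_ip = DEFAULT_HOST_IP
        host_port, container_port = parts
    elif len(parts) == 3:
        host_ip, host_port, container_port = parts
    else:
        raise ValueError(f"unsupported publish spec: {spec!r}")
    return PortBinding(host_ip, int(host_port), int(container_port))


def exposed_beyond_loopback(binding: PortBinding) -> bool:
    """True unless the binding is limited to a loopback address."""
    return not ipaddress.ip_address(binding.host_ip).is_loopback


print(exposed_beyond_loopback(parse_publish_spec("27017:27017")))            # True
print(exposed_beyond_loopback(parse_publish_spec("127.0.0.1:27017:27017")))  # False
```

The point is only that leaving out the IP prefix is not neutral: it selects the wildcard address, so the explicit `127.0.0.1:` prefix is what keeps a dev database local.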

Where are you working that your local machine is regularly exposed to malicious traffic?

My use case was run a mongodb container on my local, while I run my FE+BE with fast live-reloading outside of a container. Then package it all up in services for docker compose on the remote.

Ok… but that doesn’t answer my question. Where are you physically when you’re working on this that people are attacking exposed ports? I’m either at home or in the office, and in either case there’s an external firewall between me and any assholes who want to exploit exposed ports. Are your roommates or coworkers those kinds of assholes? Or are you sitting in a coffee shop or something?

This was on a VPS (remote) where I didn’t realise Docker was even capable of punching through UFW. I assumed (incorrectly) that if a port wasn’t reverse proxied in my nginx config, then it would remain on localhost only.

Just run `docker run -p 27017:27017 mongo:latest` on a VPS and check the default collections after a few hours and you’ll likely find they’re replaced with a ransom message.

Ah, when you said local I assumed you meant your physical device

I DIDNT KNOW THAT! WOW, this puts “not to use network_mode: host” another level.

`network: host` gives the container basically full access to any port it wants. But even with other network modes you need to be careful, as any `-p <external port>:<container port>` creates the appropriate firewall rule automatically.

I just use caddy and don’t use any port rules on my containers. But maybe that’s also problematic.

Actually I believe host networking would be the one case where this isn’t an issue. Docker isn’t adding iptables rules to do NAT masquerading because there is no IP forwarding being done.

When you tell docker to expose a port you can tell it to bind to loopback and this isn’t an issue.

Nat is not security.

Keep that in mind.

It’s just a crutch ipv4 has to use because it’s not as powerful as the almighty ipv6

Or maybe it should be easy to configure correctly?

instructions unclear, now its hard to use and to configure

This post inspired me to try podman, after it pulled all the images it needed my Proxmox VM died, VM won’t boot cause disk is now full. It’s currently 10pm, tonight’s going to suck.

eh, booting into single-user mode should work fine, uninstall podman and init 5

Okay so I’ve done some digging and got my VM to boot up! This is not Podman’s fault. I got lazy setting up Proxmox and never really learned LVM volume storage: internally the VM shows 90Gb used of 325Gb, while Proxmox is claiming 377Gb is used on the LVM-Thin partition.

I’m backing up my files as we speak, thinking of purging it all and starting over.

Edit: before I do the sacrificial purge This seems promising.

thinking of purging it all and starting over.

Don’t do that. You’ll learn nothing.

So I happened to follow the advice from that Proxmox post, enabled the “Discard” option for the disk and ran `sudo fstrim /` within the VM. Now the Proxmox LVM-Thin partition is sitting at a comfortable 135Gb out of 377Gb.

Think I’m going to use this `fstrim` command on my main desktop to free up space.

I think linux does fstrim oob

It’s been about a day since this issue and I’ve been keeping a close eye on my local-lvm. It fills fast, like, ridiculously fast, and I’ve been having to run `sudo fstrim /` inside the VM just to keep it maintained. I’m finding it weird that I’m only noticing this now, as this server has been running for months!

For now I edited my `/etc/bash.bashrc` so whenever I ssh in it’ll automatically run `sudo fstrim /`. There is something I’m likely missing but this works as a temporary solution.

We use Firewalld integration with Docker instead due to issues with UFW. Didn’t face any major issues with it.

I also ended up using firewalld and it mostly worked, although I first had to change some zone configs.

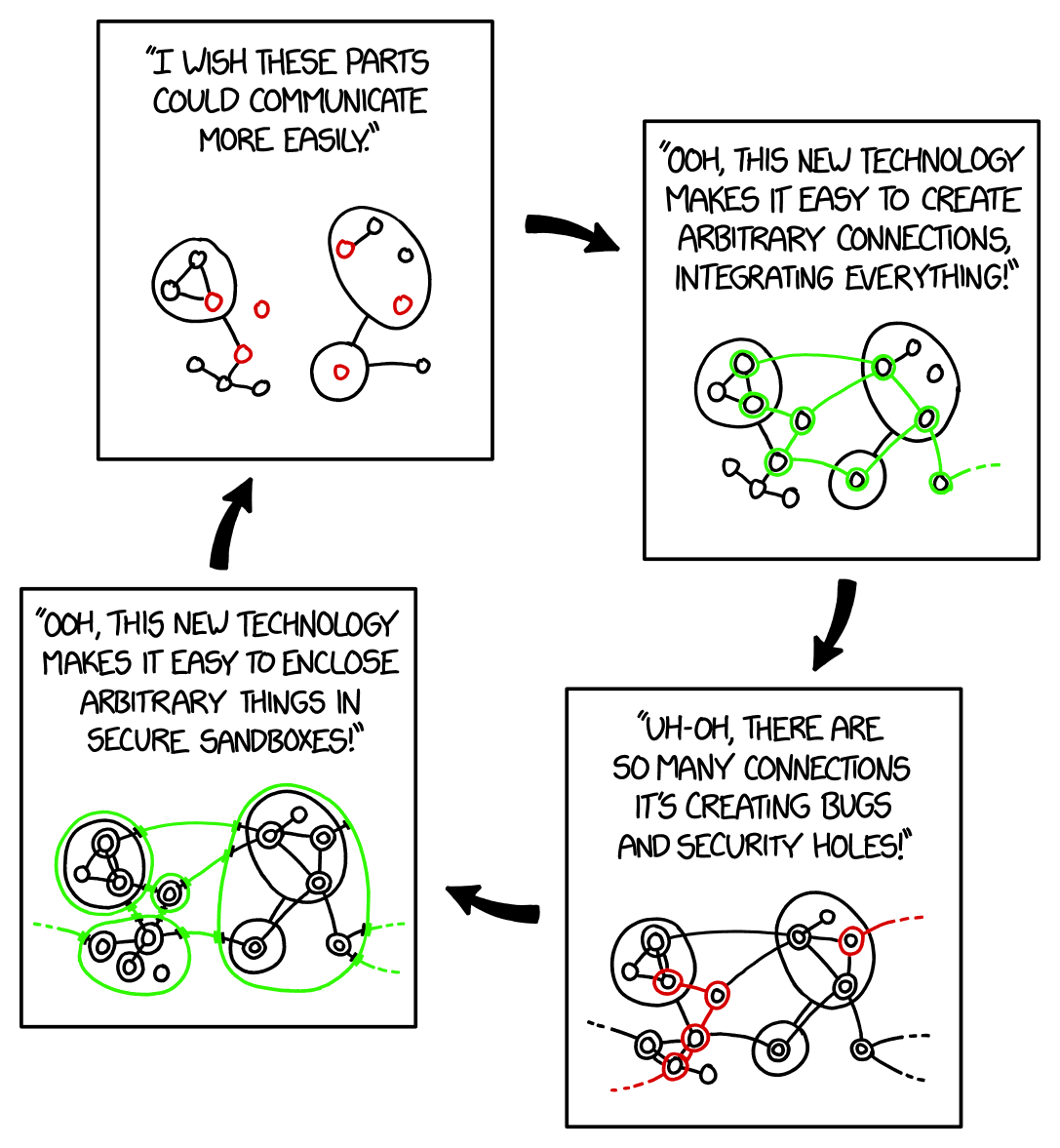

It’s my understanding that docker uses a lot of fuckery and hackery to do what they do. And IME they don’t seem to care if it breaks things.

To be fair, the largest problem here is that it presents itself as the kind of isolation that would respect firewall rules, not that they don’t respect them.

People wouldn’t make the same mistake in NixOS, despite it doing exactly the same.

This is why I hate Docker.

I don’t know how much hackery and fuckery there is with docker specifically. The majority of what docker does was already present in the Linux kernel namespaces, cgroups etc. Docker just made it easier to build and ship the isolated environments between systems.

I mean if you’re hosting anything publicly, you really should have a dedicated firewall

Do you mean a hardware firewall?

Basically yeah, though I didn’t specify hardware because of how often virtualization is done now

The VPS I’m using unfortunately doesn’t offer an external firewall

On windows (coughing)

Somehow I think that’s on ufw not docker. A firewall shouldn’t depend on applications playing by their rules.

ufw just manages iptables rules, if docker overrides those it’s on them IMO

Not really.

Both docker and ufw edit iptables rules.

If you instruct docker to expose a port, it will do so.

If you instruct ufw to block a port, it will only do so if you haven’t explicitly exposed that port in docker.

It’s a common gotcha but it’s not really a shortcoming of docker.
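To make the ordering behind this gotcha concrete, here is a toy Python model of the path an inbound packet takes. It is a deliberate simplification, not real netfilter code: `traverse`, `HOST_IP` and `CONTAINER_IP` are hypothetical names and example addresses, ufw is reduced to a set of blocked ports on INPUT, and Docker is reduced to one DNAT lookup plus an unconditional accept on FORWARD.

```python
from dataclasses import dataclass, replace

HOST_IP = "203.0.113.10"     # example address for a hypothetical VPS
CONTAINER_IP = "172.17.0.2"  # assumed container address on a bridge network


@dataclass(frozen=True)
class Packet:
    dst_ip: str
    dst_port: int


def traverse(packet, dnat_rules, input_blocked_ports):
    """Return (verdict, chains_visited) for an inbound packet in this toy model."""
    chains = ["nat/PREROUTING"]

    # Step 1: nat PREROUTING. A published port has a DNAT rule that
    # rewrites the destination to the container before any filtering.
    target = dnat_rules.get(packet.dst_port)
    if target is not None:
        packet = replace(packet, dst_ip=target[0], dst_port=target[1])

    # Step 2: routing decision. Only packets still addressed to the host
    # itself reach INPUT, which is where the ufw-style block list lives.
    if packet.dst_ip == HOST_IP:
        chains.append("filter/INPUT")
        verdict = "DROP" if packet.dst_port in input_blocked_ports else "ACCEPT"
    else:
        chains.append("filter/FORWARD")
        verdict = "ACCEPT"  # stands in for Docker's own forwarding rules
    return verdict, chains


blocked = {27017}  # "ufw deny 27017", modelled as an INPUT-only rule

# Port published by Docker: the packet is rewritten and never sees INPUT.
print(traverse(Packet(HOST_IP, 27017), {27017: (CONTAINER_IP, 27017)}, blocked))
# Same port with no container publishing it: INPUT applies and drops it.
print(traverse(Packet(HOST_IP, 27017), {}, blocked))
```

In this model the block rule is never consulted for the published port, which matches the Docker docs quote at the top of the thread: the packet is diverted in the nat table before it reaches the chains ufw manages.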

Feels weird that an application is allowed to override iptables though. I get that when it’s installed with root everything’s off the table, but still…

Linux lets you do whatever you want and that’s a side effect of it, there’s nothing preventing an app from messing with things it shouldn’t.

If you give it root

there’s nothing preventing an app from messing with things it shouldn’t.

that’s not exactly a linux specialty

It is decidedly weird, and it’s something docker handles very poorly.

Docker specifically creates rules for itself which are by default open to everyone. UFW (and the underlying nftables/iptables) just does as it’s told by the system root (via docker). I can’t really blame the system when it does what it’s told to do, and it’s been the administrator’s job to manage that in a reasonable way since forever.

And (not related to linux or docker in any way) there’s still big commercial software which highly paid consultants install and the very first thing they do is to turn the firewall off…

Ok

So, confession time.

I don’t understand docker at all. Everyone at work says “but it makes things so easy.” But it doesnt make things easy. It puts everything in a box, executes things in a box, and you have to pull other images to use in your images, and it’s all spaghetti in the end anyway.

If I can build an Angular app the same on my Linux machine and my Windows PC, and everything works identically on either, and the only thing I really have to make sure of is that the deployment environment has node and the angular CLI installed, how is that not simpler than everything you need to do to set up a goddamn container?

Sure but thats an angular app, and you already know how to manage its environment.

People self host all sorts of things, with dozens of services in their home server.

They dont need to know how to manage the environment for these services because docker “makes everything so easy”.

have to make sure of is that the deployment environment has node and the angular CLI installed

I have spent so many fucking hours trying to coordinate the correct Node version to a given OS version, fucked around with all sorts of Node management tools, ran into so many glibc compat problems, and regularly found myself blowing away the packages cache before Yarn fixed their shit and even then there’s still a serious problem a few times a year.

No. Fuck no, you can pry Docker out of my cold dead hands, I’m not wasting literal man-weeks of time every year on that shit again.

(Sorry, that was an aggressive response and none of it was actually aimed at you, I just fucking hate managing Node.js manually at scale.)

This is less of an issue with JS, but say you’re developing this C++ application. It relies on several dynamically linked libraries. So to run it, you need to install all of these libraries and make sure the versions are compatible and don’t cause weird issues that didn’t happen with the versions on the dev’s machine. These libraries aren’t available in your distro’s package manager (only as RPM) so you will have to clone them from git and install all of them manually. This quickly turns into a hassle, and it’s much easier to just prepare one image and ship it, knowing the entire environment is the same as when it was tested.

However, the primary reason I use it is because I want to isolate software from the host system. It prevents clutter and allows me to just put all the data in designated structured folders. It also isolates the services when they get infected with malware.

Ok, see the sandboxing makes sense and for a language like C++ makes sense. But every other language I used it with is already portable to every OS I have access to, so it feels like that defeats the benefit of using a language that’s portable.

I think it’s less about making it portable to different OS, and more different versions of the same os on different machines with different configuration and libraries and software versions.

it does not solve portability across OS families. you can’t run a windows based docker image on linux, and running a linux image on windows is solved by starting a linux VM.

Oh, fair. That’s a good point.

I put off docker for a long time for similar reasons but what won me over is docker volumes and how easy they make it to migrate services to another machine without having to deal with all the different config/data paths.

You’re right. As an old-timey linux user I find it more confusing than running the services directly, too. It’s another abstraction layer that you need to manage and which has its own pitfalls.

I pretty much share the same experience. I avoid using docker or any other containerizing thing due to the amount of bloat and complexity that this shit brings. I always get out of my way to get Software running w/o docker, even if there is no documented way. If that fails then the Software just sucks.

Think of it more like pre-canned build scripts. I can just write a script (`Dockerfile`), which tells docker how to prepare the environment for my app. Usually, this is just pulling the pre-canned image for the app, maybe with some extra dependencies pulled in.

This builds an image (a non-running snapshot of your environment), which can be used to run a container (the actual running app)

Then, I can write a config file (`docker-compose.yaml`) which tells docker how to configure everything about how the container talks to the host:

- shared folders (volumes)

- other containers it needs to talk to

- network isolation and exposed ports

The benefit of this, is that I don’t have to configure the host in any way to build / host the app (other than installing docker). Just push the project files and docker files, and docker takes care of everything else

This makes for a more reliable and dependable deploy

You can even develop the app locally without having any of the devtools installed on the host

As well, this makes your app platform agnostic. As long as it has docker, you don’t need to touch your build scripts to deploy to a new host, regardless of OS

A second benefit is process isolation. Should your app rely on an insecure library, or should your app get compromised, you have a buffer between the compromised process and the host (like a light weight VM)