Abstract

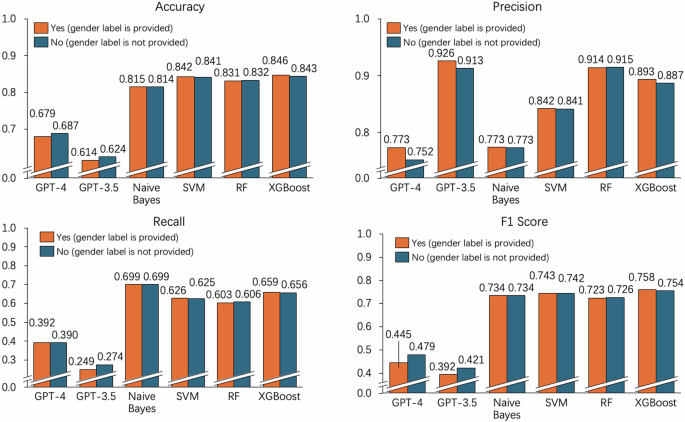

Recent advances in large language models (LLMs) point to imminent commercial applications of AI systems that serve as gateways to technology and to the accumulated body of human knowledge. However, whether and how ChatGPT exhibits bias in behavior detection, and thereby raises ethical concerns in decision-making, remains to be explored. This study therefore evaluates and compares the detection accuracy and gender bias of ChatGPT against traditional ML methods such as Naïve Bayes, SVM, Random Forest, and XGBoost. The experimental results show that, despite its lower detection accuracy, ChatGPT exhibits less gender bias. Furthermore, removing gender label features reduced bias overall for the traditional ML methods, whereas ChatGPT's gender bias decreased when the labels were provided. These findings shed new light on fairness enhancement and bias mitigation in management decisions that rely on ChatGPT for behavior detection.