YouTube’s rollout of AI tools shows nonsensical AI-generated audience engagement and AI slop thumbnails.

YouTube is AI-generating replies for creators on its platform so they can more easily and quickly respond to comments on their videos, but it appears that these AI-generated replies can be misleading, nonsensical, or weirdly intimate.

YouTube announced that it would start rolling out “editable AI-enhanced reply suggestions” in September, but thanks to a new video uploaded by Clint Basinger, the man behind the popular LazyGameReviews channel, we can now see how they actually work in the wild. For years, YouTube has experimented with auto-generated suggested replies to comments that work much like the suggested replies you might have seen in Gmail: instead of typing out a response yourself, you can click on one of three short suggestions like “Thanks!” or “I’m on it,” which might be relevant. “Editable AI-enhanced reply suggestions” on YouTube work similarly, but instead of short, simple replies, they offer longer, more involved answers that are “reflective of your unique style and tone.” According to Basinger’s video demoing the feature, the AI-generated replies do appear to be trained on his own comments, at times replicating previous comments he made word for word, but many of the suggested replies are strangely personal, wrong, or just plain weird.

For example, last week Basinger posted a short video about a Duke Nukem-branded G Fuel energy drink that comes as a powder that needs to be mixed with water. In the video, Basinger makes himself a serving of the drink but can’t find the scoop he’s supposed to use to measure out the formula.

“I wouldn’t be surprised if the scoop was buried in the powder,” one YouTube user commented on the Duke Nukem G Fuel video, which certainly sounds right to me as someone who’s been serving up baby formula for the last year.

YouTube’s AI suggested that Basinger reply to that comment by saying: “It’s not lost, they just haven’t released the scoop yet. It’s coming soon.”

Image: LGR Blerbs/YouTube

I can see how that comment could make sense in the context of the other videos LGR publishes, which usually review old games, gadgets, and other tech, but it is obviously wrong in this instance.

Another suggested reply to that same comment said: “I’ll have to check if they’re using a proprietary blend that requires a special scoop.”

“My creativity and craft stems completely from my own brain, and handing that off to some machine learning thing that mimics my style not only takes away from the enjoyment of it all for me, but it feels supremely disingenuous,” Basinger told me in an email. “The automated comments in particular come across as tone deaf, since a huge reason YouTube makes sense at all is the communication and relationship between audience and creator. I’ve had dozens of people say that they now second-guess every interaction with YouTubers in the comments since it could easily be a bot, a fake response.”

Another commenter on the Duke Nukem G Fuel video joked that Basinger should have had a tighter grip on the lid as he was shaking the formula to prevent it from flying all over the place.


Basinger bursts out laughing as he reads YouTube’s suggested AI-generated reply to that comment: “I’ve got a whole video on lid safety coming soon, so you don’t have to worry!”

At other times, the AI-suggested replies are just nonsensical. The Duke Nukem G Fuel review wasn’t posted to the main LGR channel, but to a channel called LGR Blerbs, the name he uses for shorter, less deeply researched videos about whatever he’s interested in. A few commenters said they were happy he was posting to the Blerbs channel again, with one saying “Nice. Back to the blerbs.”

YouTube’s AI suggested Basinger reply to that comment by saying: “It’s a whole new kind of blerp,” which I suppose is funny, but also doesn’t mean anything.

The weirdest examples of AI-generated replies in the video in my opinion are those that attempt to speak to Basinger’s personal life. In response to another commenter who said they were happy Basinger was posting to the Blerbs channel again, YouTube’s AI suggested the following reply: “Yeah, I’m a little burnt out on the super-high-tech stuff so it was refreshing to work on something a little simpler 🙂.” Another AI-generated reply thanked commenters for their patience and said that Basinger was taking a break but was back to making videos now.

YouTuber burnout is a well-established problem among YouTube creators, to the point where YouTube itself offers tips on how to avoid it. The job is taxing not only because churning out a lot of videos helps them get picked up by YouTube’s recommendation algorithm, but also because comments on those videos, and replies to those comments, help increase engagement and visibility.

YouTube rewarding that type of engagement incentivizes the busywork of creators replying to comments, which predictably produced an entire practice and set of tools that allow creators to plug their channels into a variety of AI services that will automatically reply to comments for them. YouTube’s AI-enhanced reply suggestions feature just brings that practice of manufactured engagement in-house.

Clearly, Google’s decision to brand the feature as editable AI-enhanced reply suggestions means that it’s not expecting creators to use them as-is. Its announcement calls them “a helpful starting point that you can easily customize to craft your reply to comments.” However, judging by what they look like at the moment, many of the AI-generated replies are too wrong or misleading to be salvageable, which once again shows the limitations of generative AI’s capabilities despite its rapid deployment by the biggest tech companies in the world.

“I would not consider using this feature myself, now or in the future,” Basinger told me. “And I’d especially not use it without disclosing the fact first, which goes for any use of AI or generative content at all in my process. I’d really prefer that YouTube not allow these types of automated replies at all unless there is a flag of some kind beside the comment saying ‘This creator reply was generated by machine learning’ or something like that.”

The feature rollout is also a worrying sign that YouTube could see a rapid descent towards AI-sloppyfication of the type we’ve been documenting on Facebook.

In addition to demoing the AI-enhanced reply suggestion feature, Basinger is also one of the few YouTube creators who now has access to the new YouTube Studio “Inspiration” tab, which YouTube also announced in September. YouTube says this tab is supposed to help creators “curate suggestions that you can mold into fully-fledged projects – all while refining those generated ideas, titles, thumbnails and outlines to match your style.”

Basinger shows how he can write a prompt that immediately AI-generates an idea for a video, including an outline and a thumbnail. The issue in this case is that Basinger’s channel is all about reviewing real, older technology, and the AI will outline videos for products that don’t exist, like a Windows 95 virtual reality headset. The suggested AI-generated thumbnails also have all the issues we’ve seen in other AI image generators, like obvious misspellings of simple words.

Image: LGR Blerbs/YouTube

“If you’re really having that much trouble coming up with a video idea, maybe making videos isn’t the thing for you,” Basinger said.

Google did not respond to a request for comment.

AI generated video ideas, AI generated thumbnails, AI generated comments from the viewers, AI generated comments from the creators…

I mean, AI already gave me the ick but this is super extra ick.

Youtube is going to be 100% over-run with absolute garbage, and there’s going to be zero way to determine which content is human and not and it’s going to completely make the platform utterly worthless.

It feels like the most urgent things to figure out how to make viable are things like Loops and Peertube, even over 160-character hot-take platforms or link aggregation or whatever, since the audience is SO much larger, and SO much more susceptible to garbage.

It’s all a ploy to generate “engagement” and make more money for Google. They have already been caught once boosting video views to milk the advertisers. Oh no, it wasn’t them! It was some evil hackers using their infrastructure and tools.

Regarding the video platforms, the only way is if everyone hosts their own content and distributes it via RSS… But then where is the money in it?

The same place a lot of it is now: patreon, merch, and in-video sponsors.

Sure you lose the Google adsense money, but really, that’s pretty minimal these days after constant payout cuts (see: everyone on youtube complaining about it every 18 months or so) but the bigger pain is reach.

If I post a video on Youtube, it could land in front of a couple of million people either by search, algorithm promotion, or just random fucking chance.

If I post it on my own Peertube instance, it’s in front of uh, well uh, nobody.

That’s probably the harder problem to solve: how can you make a platform/tech stack gain sufficient inertia that it’s not just dumping content in a corner where nobody ever sees it?

> there’s going to be zero way to determine which content is human and not

The thing about this prediction is that if it actually does become true, it’ll apply to the content you already enjoy. If it’s content you enjoy, it’s content you enjoy. If not, don’t watch it. I don’t want to see human creators vanish either but I also think AI creators can coexist with them and ultimately, viewers will vote with their views. YouTube isn’t gonna make ad money off videos people aren’t watching, so if AI content is shite, they’ll walk back on it

You’d be happily married to a sexbot, wouldn’t you?

Nah I’d rather marry a human but nice slippery slope

Why doesn’t YouTube make the AIs watch advertisements for me?

Seems much much more useful

Same Reason why Necromancers don’t hire living people,

Blindly consuming Humanoids are much cheaper :D

For the first half of my life, I was a great technology enthusiast, but from the beginning of the second half until now, I’ve been turning more and more into the opposite…

I know the problem isn’t technology itself, but we can’t separate it from how it’s used by companies and people, so being aware of that doesn’t help.

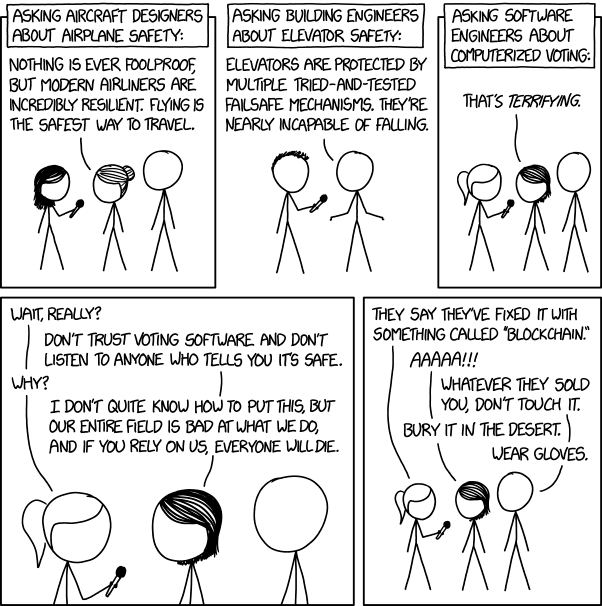

I get the general point, but for the specific example in that comic, electronic voting has been in use in Brazil since the '90s, and there’s basically no fraud connected to it from what I’ve heard/read. And they have the official results in hours, not days.

I like the idea of electronic voting, but in Switzerland we vote physically every few months and have the results in hours as well.

(And that is not about the size of the country, divide and conquer…)

Fraud isn’t the problem. The vote has to be secret, equal, and free.

You don’t want to be able to find out who voted how. But you need to know who already voted.

Proving fraud actually happened is difficult with electronic voting, to boot.

Tech can’t solve problems humans created.

I think ole Ted wasn’t wrong in some ways

That’s one small step for AI, one giant leap for Dead Internet Theory (Reality?).

The number of things that are blocked on my machine for youtube, be it the UI, the network requests, and some of the content, is ever increasing. Another one on the pile I guess.

This seems very counterproductive for the kind of business they run. If everything from videos and thumbnails to comment sections is AI generated, everyone will just stick to the people they are subscribed to and never read the comments. All this would do is promote less engagement with the actual platform.

Not just creator tools: the regular comments are showing signs of AI garbage too. I saw one recently where the same comment was effectively repeated with minor variations 10 to 20 times.

To think YT comments could somehow defy the laws of the universe and get worse . . . it’s boggling.

It’s sort of like the false vacuum. YouTube commenters are finding new ways to make the comment section even worse, and it’ll spread and decay to that lower level, until they find another way to be even worse.

it’s interesting that they say their reply suggestions are “AI-enhanced”. what exactly is being enhanced here? or is “AI-enhanced” becoming a euphemism for “AI-generated”?

They are based on the comments the creator has left before instead of being a generic output from an LLM based on the message it is replying. So they are your comments, but with AI “enhancements”, instead of it just suggesting you directly copy-paste some old comment. But at the end of it obviously they are AI generated, that’s how the tech works.

This is horrifying, but the comment about the scoop not being released yet is hilarious in connection to Duke Nukem

Scoop Forever will be released any day now!

I would think detecting and removing spammy and scammy comments would be a higher priority.

And useless comments like: “First” “Who else is watching this in 2024?” “Like if you agree 👇” “Pin my comment”

The worst are things like:

- Bot 1 - I went from broke to making $30k/month once I found this amazing financial advisor.

- Bot 2 - what financial advisor did you use?

- Bot 3 - I used X, Google their name.

- Bot 4 - Oh yeah, X is great, I’ve used them for years!

- Bot 2 - I just talked to X, they’re wonderful.

- …

And so on. They upvote each other’s comments, and since downvotes don’t show up anymore, they seem popular.

There’s all kinds of crap like that, and they’re really obvious and probably trivial for AI to detect.

Or put another way, it’s enabling advertisers to better camouflage themselves as humans. (Because we just cannot have people communicating directly to each other on the web…)

I don’t use any of Meta’s products so I don’t know what it’s like there, but YouTube is getting worse by the day. I’ve started to reject videos unless the creator is visible from time to time so I can put a face to the voice (or hands to the voice in the case of This Old Tony). And with video gen being released, soon I won’t be able to trust those videos either.

It’s getting hard to tell (at least right away) what’s been made by people and what’s been made by AI. Just yesterday I was telling my wife, I’m sorry but I cannot fulfill this request. It goes against MaximumDerekAI’s acceptable use policy.

youtube makes their site even worse. what else is new.

Saying the scoop isn’t released yet for a Duke Nukem branded drink powder is pretty funny. Gotta wait 18 years for that scoop, and it’s gonna be the shittiest scoop ever.

I hope GFuel sells the special scoop soon, I can’t wait to give the company more money 🙏

I seriously can’t wait for youtube to die. I’ve already started uploading videos to peertube instead.

These abusive relationships need to end.