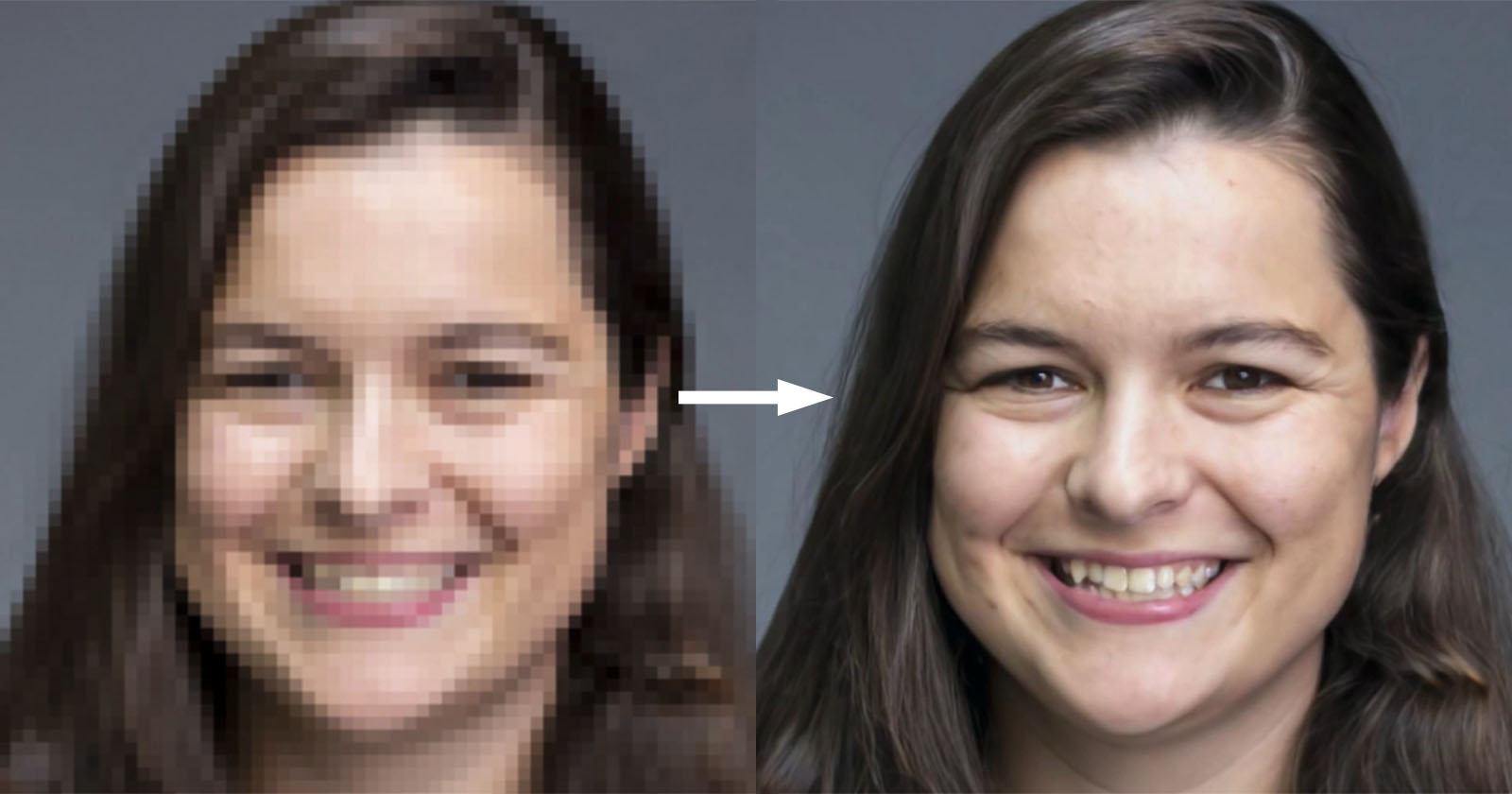

This algorithm creates missing data that fits the original image, but many different possibilities can fit it equally well. This can lead to false accusations if, for example, it is used to find a match in security footage. Here's an example of the system creating a completely different face that still fits the original image: https://twitter.com/notlewistbh/status/1432936600745431041
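To make the "many possibilities fit" point concrete, here's a toy sketch. It assumes the low-res image came from simple 2x2 average pooling (real camera degradation is messier, and `downsample`, `face_a` and `face_b` are made-up names for illustration). Two clearly different high-res grids shrink to the exact same low-res grid, so an upscaler has no way to tell which one was real and has to invent something plausible.

```python
# Hypothetical illustration: 2x2 average pooling stands in for whatever
# degradation produced the low-resolution input (an assumption, not the model's).

def downsample(img, factor=2):
    """Average each factor x factor block of a 2D list of pixel values."""
    h, w = len(img), len(img[0])
    out = []
    for i in range(0, h, factor):
        row = []
        for j in range(0, w, factor):
            block = [img[i + di][j + dj] for di in range(factor) for dj in range(factor)]
            row.append(sum(block) / len(block))
        out.append(row)
    return out

# Two different 4x4 "high-resolution" images (made-up pixel values).
face_a = [
    [10, 30, 50, 70],
    [30, 10, 70, 50],
    [90, 110, 130, 150],
    [110, 90, 150, 130],
]
face_b = [
    [20, 20, 60, 60],
    [20, 20, 60, 60],
    [100, 100, 140, 140],
    [100, 100, 140, 140],
]

print(face_a == face_b)                          # False: the details differ
print(downsample(face_a) == downsample(face_b))  # True: same low-res image
print(downsample(face_a))                        # [[20.0, 60.0], [100.0, 140.0]]
```

Going the other way (low-res to high-res) is one-to-many, which is exactly why a "match" produced this way can't be treated as evidence.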

No one is claiming that this should be used to enhance security camera footage though.

I think it's important to consider, because it totally will be used for cases like that if it's allowed.

I'm starting to worry that, for people not familiar with ML, the most popular way to tell loss functions and sampling algorithms apart is through the prism of BLM culture. That said, I agree with your point that there is always a thin end of the wedge.

The cascaded diffusion model they use was also posted here earlier today.