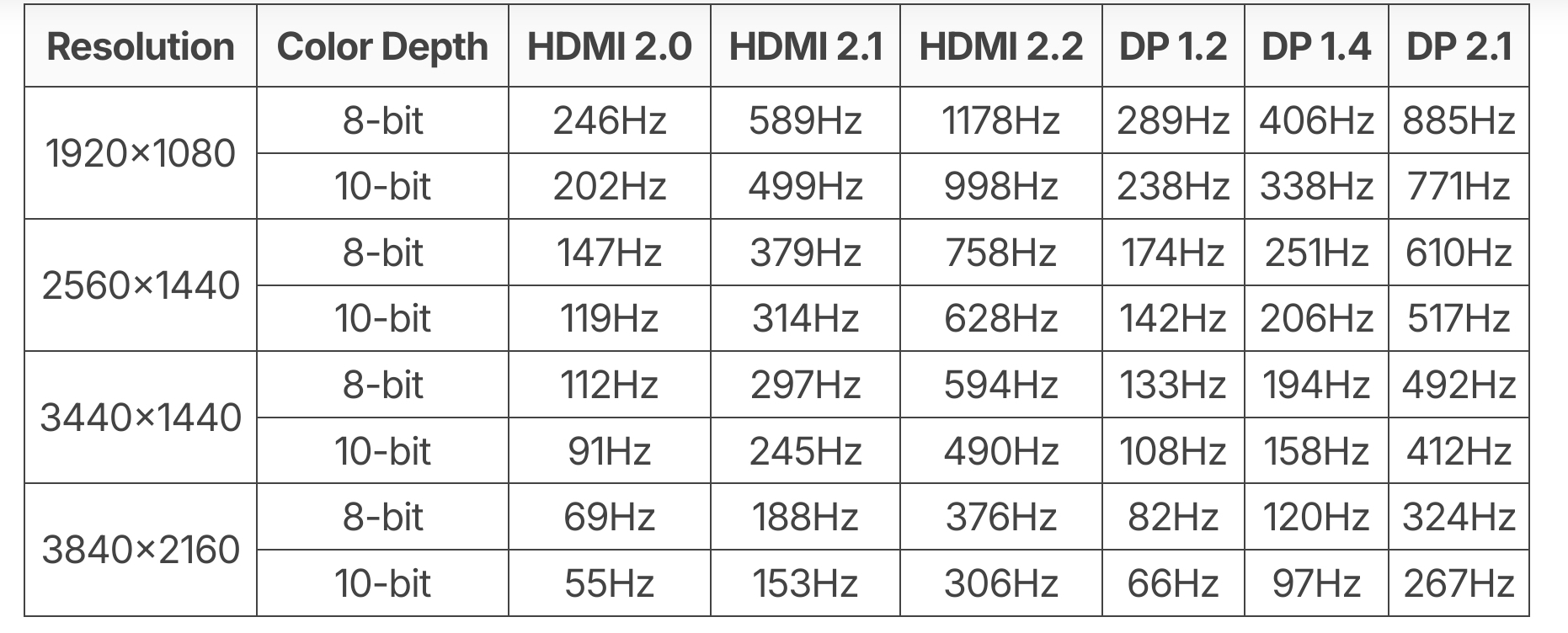

The TL;DR is that the organization that controls the HDMI standard won’t allow any open source implementation of HDMI 2.1.

So the hardware is fully capable of it, but they’ll get in trouble if they officially implement it.

Instead it’s officially HDMI 2.0 (which maxes out at 4K @ 60Hz), but through a technique called chroma sub-sampling they’ve been able to raise that to 4K @ 120Hz.

However, this comes with some minor reductions in picture quality, and the whole thing would be much easier if the HDMI Forum were more consumer-friendly.

In the meantime, the Steam Machine also has DisplayPort as a completely issue-free display option.
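The HDMI 2.0 / chroma sub-sampling point can be made concrete with some rough bandwidth arithmetic. This is a back-of-the-envelope sketch, not how the Steam Machine's driver actually picks a mode. It assumes the standard CTA-861 4K timing (4400 × 2250 total pixels per frame including blanking), 8 bits per colour component, and HDMI 2.0's 18 Gbit/s link with 8b/10b coding, which leaves about 14.4 Gbit/s for pixel data.

```python
# Back-of-the-envelope check: why 4K @ 120Hz only fits HDMI 2.0 with 4:2:0.
# Assumptions (not from the original post): CTA-861 4K timing of
# 4400 x 2250 total pixels per frame (3840 x 2160 active plus blanking),
# 8 bits per colour component, and HDMI 2.0's 18 Gbit/s TMDS link,
# of which 8b/10b coding leaves 14.4 Gbit/s for pixel data.

H_TOTAL, V_TOTAL = 4400, 2250
HDMI20_DATA_GBPS = 18.0 * 8 / 10  # 14.4 Gbit/s usable

# Average bits per pixel at 8 bits per component.
BPP_RGB = 24  # full-resolution R, G and B (same budget as YCbCr 4:4:4)
BPP_420 = 12  # full-resolution luma, chroma halved horizontally and vertically


def required_gbps(refresh_hz: int, bits_per_pixel: int) -> float:
    """Pixel data rate in Gbit/s, including the blanking intervals."""
    return H_TOTAL * V_TOTAL * refresh_hz * bits_per_pixel / 1e9


for label, hz, bpp in [
    ("4K@60  RGB  ", 60, BPP_RGB),
    ("4K@120 RGB  ", 120, BPP_RGB),
    ("4K@120 4:2:0", 120, BPP_420),
]:
    need = required_gbps(hz, bpp)
    verdict = "fits" if need <= HDMI20_DATA_GBPS else "does NOT fit"
    print(f"{label}: {need:6.3f} Gbit/s -> {verdict} in HDMI 2.0")
```

Under these assumptions, halving the chroma resolution in both directions brings 4K @ 120Hz down to exactly the data rate of 4K @ 60Hz RGB (about 14.26 Gbit/s), which is why it squeezes into HDMI 2.0. The price is the reduced colour detail, i.e. the minor loss in picture quality.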

I have an HDMI splitter, like a 5-input, 1-output thing. I have not used it in a while. Does HDMI pass the DRM through, or is the DRM in the splitter?

AMD already spent a significant amount of effort implementing HDMI 2.1 in their open driver in such a way that it would be compliant. The suits from the HDMI consortium still said no.

https://www.phoronix.com/news/HDMI-2.1-OSS-Rejected

AMD Linux engineers have spent months working with their legal team and evaluating all HDMI features to determine if/how they can be exposed in their open-source driver. AMD had code working internally and then the past few months were waiting on approval from the HDMI Forum… Sadly, the HDMI Forum has turned down AMD’s request for open-source driver support.

AMD Linux engineer Alex Deucher commented on the ticket:

"The HDMI Forum has rejected our proposal unfortunately. At this time an open source HDMI 2.1 implementation is not possible without running afoul of the HDMI Forum requirements."

I’m honestly surprised TV OEMs haven’t at least tried adding DisplayPort, especially during the period when it far exceeded the highest possible quality on HDMI.

HDMI is just the last hardware standard created from the ashes of the format wars that has no practical place anymore. It only exists to collect hostage licensing fees.

TV OEMs are the ones that set up the HDMI club. They want the content encrypted with DRM all the way: in transit, to your PC, to your cable, to your screen. Look up the analog hole. This battle has been going on for 20 years. Share this with interested people.

I don’t know why they’d think I’d capture 600MB/s of uncompressed video though.

Since the torrent sites are crammed with full-quality 4K Blu-ray remuxes and WEB-DLs straight from Amazon, there are clearly easier and better ways of doing this than putting encryption in a cable.
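For a sense of scale behind the 600MB/s figure, here is a quick calculation. It assumes 8-bit RGB (3 bytes per pixel), no compression at all, and counts active pixels only; under those assumptions, 4K at a film-style 24 frames per second lands right around that number.

```python
# Rough size of an uncompressed video stream, like the one a capture
# device would have to record straight off the cable.
# Assumption: 8-bit RGB (3 bytes per pixel), active pixels only.


def uncompressed_bytes_per_second(width: int, height: int, fps: int,
                                  bytes_per_pixel: int = 3) -> int:
    """Bytes per second of raw frames at the given resolution and frame rate."""
    return width * height * bytes_per_pixel * fps


rate = uncompressed_bytes_per_second(3840, 2160, 24)
film_bytes = rate * 2 * 60 * 60  # a two-hour film

print(f"4K @ 24 fps: {rate / 1e6:.0f} MB/s")         # 4K @ 24 fps: 597 MB/s
print(f"Two-hour film: {film_bytes / 1e12:.1f} TB")  # Two-hour film: 4.3 TB
```

That works out to roughly 4.3 TB per film before any compression, which is why grabbing an already-compressed release is so much easier than recording the cable.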

Oh, they are working on that too. Win 11 depends on TPM modules, and that’s no accident. Once they have the full software and hardware pipeline, they can control the media we see (for profit, but also for authoritarianism, because the jump is so small).

I love that you’re talking about these issues, but the TPM has nothing to do with any of this. It’s also not a hard requirement for Windows 11 (even though that’s basically all the media was talking about).

Because TV OEMs are the ones in the HDMI consortium.

I hated HDMI when it came out, and I continue to hate it.

And what do you use?

Displayport

Component RCA like god intended

DisplayPort?

I see.

Fuck HDCP.

I tried to stick with DP only, but TVs with it are getting rarer and much more expensive.

Buy monitors instead. Also saves you the headache of dealing with “smart TV” bullshit.

It’s hard to find monitors above a certain size that aren’t exorbitantly expensive, and I do like a large screen for couch gaming and watching TV (well, streaming video; I ain’t gonna pay for a TV license just to watch the one terrestrial TV show I actually care about).

Do you know of any big dumb TVs or monitors that I could buy in Europe? I only know of Sceptre TVs which are mostly meant for businesses and storefronts but they are extremely hard to get in Europe.

Biggest I found is the Acer Nitro XV275KP3 Gaming Monitor. You kinda pay gaming hardware prices, but given the support for up to 120Hz and HDR10, I was OK with that. It’s mostly used for gaming, anyway.

It used to be that projectors were a way to sidestep the “smart” bullshit, but they started adding that too. Even the business ones.

A 27" monitor will not be anywhere near a replacement for a 60" TV, I’m afraid.

That one is 27". I think that’s way too small for the living room. I also consider 120Hz overkill for some couch gaming and movies.

Do let me know if you find something more suited.

fuck HDMI

all my homies hate HDMI

Why?

You can look up most of the issues with the standard, but TL;DR: DRM, expensive licensing fees, suboptimal performance compared to DisplayPort, and not able to be implemented in FOSS or even OSS systems (because of the shitty DRM which can be circumvented by an AliExpress splitter lmao)

CEC isn’t standard across devices, even though it should be a standard part of HDMI.

HDMI needs to die.

I mean, the many incarnations of USB-C are slowly making headway. For better and worse.

DisplayPort rocks

Yeah fuck the video codec mafia and all these proprietary shits like HDMI

Fun fact: all of the audio codecs are proprietary too. You won’t find an HDMI surround sound splitter on AliExpress. Say no to HDMI, say no to eARC.

You know it reminds me of the academic publishing mafia of Elsevier and the like

Both cartels are leeching off often-publicly funded research.

I used to find it took forever to start showing a picture compared to HDMI on my PC. Getting a new GPU so maybe that will improve things.

What display are you using as well? That sounds quite unusual.

If you can find anything to connect it to.

With TVs starting to get USB-C inputs, which are displayport under the hood, hopefully HDMI fucks off.

Ballparking but it will likely take closer to a decade than not for that to actually happen… and I am still not optimistic. And there are actually plenty of reasons to NOT want any kind of bi-directional data transfer between your device and the TV that gets updated to push more and more ads to you every single week.

The reason HDMI is so successful is that the plug itself has not (meaningfully?) changed in closer to 20 years than not. You want to dig out that PS3 and play some Armored Core 4 on the brand new 8k TV you just bought? You can. With no need for extra converters (and that TV will gladly upscale and motion smooth everything…).

Which has added benefits because “enthusiasts” tend to have an AV receiver in between.

The only way USB-C becomes the primary input for televisions (since DisplayPort and USB-C are arguably already the joint primary for computer monitors) is if EVERY other device migrates. Otherwise? Your new TV doesn’t work with the PS5 that Jimmy is still using to watch NFL every week.

there are actually plenty of reasons to NOT want any kind of bi-directional data transfer between your device and the TV

I’ve got bad news for you about HDMI then…

USB-C adapters for absolutely everything are thankfully quite common now thanks to the laptop/dock industry.

In the sense that we have dongles/docks, sure. In the sense of monitors with native USB-C input? These are still fairly rare, as the accepted pattern is that your dock has an HDMI/DP port and you connect via that (which actually is a very good pattern for laptops).

As for TVs? I am not seeing ANYTHING with usb c in for display. In large part because the vast majority of devices are going to rely on HDMI. As I said above.

I’ll also add that many (most?) of those docks don’t solve this problem. The good ones are configured such that they can pass the handshake information through. I… genuinely don’t know if you can do HDCP over USB-C->HDMI, as I have never had reason to test it. Regardless, it would require the devices at both ends of that chain to resolve the handshakes to enable the right HDMI protocol, which gets us back to the exact same problem we started with.

And the less good docks can’t even pass those along. Hence why there is a semi-ongoing search for a good Switch dock among users and so forth.

Regarding the Nintendo Switch, it’s because of their engineered malicious USB-C protocol design that makes the console “Not behave like a good USB citizen should”. It’s less of an issue with the peripherals as a whole.

USB-C probably cannot replace either, because the unmating force is too light. A typical HDMI or DisplayPort cable is much thicker, longer and hence heavier than a typical USB-C cable (even those specced to carry high bandwidth, like a thunderbolt cable) because they need better shielding to carry high bandwidth signals long distances - it’s not unusual to need to route HDMI several metres (but USB-C cables that long are unusual because of the different purposes)

For TVs and such it’s useful to have the inputs connect vertically, so that they don’t stick out the back of the device and cause problems pushing it against a wall. Then the weight of the end of the cable is going to be trying to pull the connector out of the TV. DisplayPort connectors can have a latch to deal with this.

Of course, there are ways around this: a new connector, for example. But it does mean that you can’t just leverage the existing pool of USB-C connectors and cables to make this ubiquitous.

To mention, this is also a problem with HDMI (but not DP).

But just have the USB-C plug insert top-down instead of bottom-up, and include room for a small loop and cable retention to ensure slack doesn’t put pressure on the port. This easily allows for fixed connections with USB-C.

There are also side-screw locking connectors for USB-C. With HDMI, a top-screw option was made for more fixed install scenarios. That design is ugly af and takes up a massive amount more room than the USB-C screw lock approach.

Could it be done with a tiny magnet?

Oh, screw lock as in like some PC tower cables? Yes, that would be really nice, I wouldn’t mind that for a phone.

Exactly that.

It’s still mostly found on commercial devices, but it is defined.

https://www.usb.org/document-library/usb-type-cr-locking-connector-specification

As an example:

https://www.startech.com/en-us/cables/usb31ccslkv1m

Though again, a lot of this could be mitigated with a loop, strain relief, and inserting from above into a port rather than letting gravity pull it down. But it would be nice to see side screw locks become more common with USB-C.

That usb-c connector with side screw locks looks like a future I want to live in.

It’s futuristic, but it’s open and not corporate. It’s miniaturized and sleek, yet still mechanically rugged.

A good USB-C cable and port can hold quite a bit of weight; I’ve easily picked my phone up by the cable, as long as I don’t make any jerky movements. That’s a lot more weight than a few feet of even a very heavily shielded cable.

Then the weight of the end of the cable is going to be trying to pull the connector out of the TV.

Just duck tape the usb cable to the back of the TV

Solvable by moving the locking mechanism out of the port and making one that you can retrofit to any cable

The connectors on the back of the TV can be oriented horizontally (like parallel to the screen, not perpendicular), which at least changes the pull force to a torque force, which isn’t ideal but easier to hold on to.

Governments should start cracking down on codecs. Why tf are dipshits allowed to hold standards hostage?

They really just need to demand that open formats are implemented in parallel with any proprietary ones, with no artificial feature/performance disparity allowed.

That kills any incentive to keep the proprietary ones locked down because eventually the open formats will be available throughout the ecosystem and users will have devices with support in the entire pipeline. Then users will simply no longer want to deal with the locked down formats for long and nobody will want to sell them.

Proprietary formats should be illegal. Consumers are idiots, marketing will convince them to support proprietary, and regulatory capture will compromise any attempt to stop disparity

It pisses me off that you gotta pay so much money to look at the official ISO 8601.

Dated joke

At this point just make an “adapter” that captures the DisplayPort signal and outputs it from a “supported” device

At this point stop using HDMI

And players that want to avoid the issue can use the Steam Machine’s DisplayPort 1.4 output, which supports even more bandwidth than HDMI 2.1 (and which can be converted to an HDMI signal with a simple dongle).
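For comparison, here is a rough sketch of the nominal maximum link rates from the respective specs: DP 1.4 at HBR3 (four lanes at 8.1 Gbit/s, 8b/10b coding), HDMI 2.0 TMDS (three channels at 6 Gbit/s, 8b/10b), and HDMI 2.1 FRL (four lanes at 12 Gbit/s, 16b/18b). It ignores FEC, packet overhead and Display Stream Compression. On raw link rate, DP 1.4 comfortably beats the HDMI 2.0 link the Steam Machine actually exposes, while full-rate HDMI 2.1 FRL is higher still, so the quote's comparison with HDMI 2.1 does not hold on raw link rate alone.

```python
# Nominal maximum link rates, before and after line coding.
# Published spec maximums only; real devices may negotiate lower tiers,
# and FEC / packet overheads and DSC are ignored here.
from dataclasses import dataclass


@dataclass(frozen=True)
class Link:
    name: str
    lanes: int
    gbps_per_lane: float
    coding_payload: int  # payload bits per code word (8 in 8b/10b)
    coding_total: int    # total bits per code word (10 in 8b/10b)

    @property
    def raw_gbps(self) -> float:
        return self.lanes * self.gbps_per_lane

    @property
    def data_gbps(self) -> float:
        # Usable rate after removing line-coding overhead.
        return self.raw_gbps * self.coding_payload / self.coding_total


LINKS = [
    Link("HDMI 2.0 (TMDS)", 3, 6.0, 8, 10),
    Link("DP 1.4 (HBR3)", 4, 8.1, 8, 10),
    Link("HDMI 2.1 (FRL)", 4, 12.0, 16, 18),
]

for link in LINKS:
    print(f"{link.name:16} raw {link.raw_gbps:5.1f} Gbit/s, "
          f"usable {link.data_gbps:5.2f} Gbit/s")
```

Keeping the coding ratio as a pair of integers makes the 8b/10b vs 16b/18b difference explicit instead of hard-coding efficiency percentages.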

So, ship with a dongle.

Doesn’t the system driver need to support the standard?

That’s what I’m saying: it would be more complicated than a dongle. The PS5 has some sort of system that handles this. It would essentially be a device that supports it and just decodes and re-encodes the video feed. As dumb as this sounds, it’d be the only solution that works on most TVs.

Is this why DisplayPort looks better for me on Linux???

Yes. DP is the right choice for civilized people.

Yep it’s pretty much better in all regards.

The only downside is no ARC support, but I suppose support for that is pretty hit or miss anyway.

Honestly, ARC is a great idea that never seems to work for me. I’ll always be RIGHT there, but my Blu-ray player turns on randomly when I’m doing something else, or something like that. So I end up turning it off.

I really wish I could find a TV within my desired specs that had DisplayPort. We will buy a Steam Machine to use it in place of our docked Steam Deck in the living room, so being able to use DP would be amazing.

Adapting DisplayPort to HDMI with minimal quality loss is child’s play. It’s the other way around that’s misery.

Any cheap adapter cable that supports DisplayPort In to HDMI Out should be perfectly fine.

I just realized I have such a cable in my desk (brand new), DP to HDMI 4K 60fps

My spouse needs something for the other way around for his desk setup

Those specs sound like HDMI 2.0 anyway. HDMI 2.1 can do 4K @ 144Hz with HDR. Or apparently even 10K @ 120Hz.

But the big thing with HDMI 2.1 is the CEC protocol, which unfortunately doesn’t translate over an adapter. But it is a very tiny thing most people won’t care about.
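The 4K @ 144Hz HDR and 10K @ 120Hz figures can be sanity-checked against HDMI 2.1's roughly 42.67 Gbit/s of usable FRL bandwidth (48 Gbit/s with 16b/18b coding). This sketch assumes HDR means 10-bit RGB (30 bits per pixel) and counts active pixels only, so it is a lower bound that ignores blanking. It suggests 4K @ 144Hz HDR is within reach uncompressed (how much headroom remains depends on the blanking timing), while 10K @ 120Hz needs several times the link's capacity, which is why modes like that rely on Display Stream Compression (DSC).

```python
# Lower-bound data rates (active pixels only, no blanking) compared with
# HDMI 2.1's usable FRL bandwidth.
# Assumption: HDR = 10 bits per component in RGB / 4:4:4, i.e. 30 bits per pixel.

HDMI21_DATA_GBPS = 48.0 * 16 / 18  # ~42.67 Gbit/s after 16b/18b coding
HDR_BPP = 30


def active_gbps(width: int, height: int, refresh_hz: int,
                bpp: int = HDR_BPP) -> float:
    """Active-pixel data rate in Gbit/s (ignores blanking, so a lower bound)."""
    return width * height * refresh_hz * bpp / 1e9


for label, w, h, hz in [("4K @ 144Hz HDR", 3840, 2160, 144),
                        ("10K @ 120Hz HDR", 10240, 4320, 120)]:
    need = active_gbps(w, h, hz)
    ratio = need / HDMI21_DATA_GBPS
    print(f"{label}: {need:6.1f} Gbit/s ({ratio:.2f}x the link)")
```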

Would be cool if they put one in the box. Would save many sad christmas days as you wait for Amazon to come round with an adapter.

I want to say you can buy an adapter to get DP 1.4 out of the Steam Machine and into HDMI 2.1 on a TV, and it should be fine. It just has to be a powered adapter, I believe.

The powered adapters are for the other way around. DP has support for HDMI out without additional components, but ofc the HDMI forum makes converting HDMI to DP like pulling teeth.

Ship a high-quality DP adapter with each one 😂

The communication protocols are just different enough that an adapter won’t work.

The adapters have circuitry inside; they exist. It’s small enough to still just look like a cable because they fit the chip in a plug end.

TIL

What a bummer. I guess TIL that i, in fact, don’t use one literally every single day.

Is it 2.1? Does it transmit CEC commands? No? Strange…

It’s almost like we are commenting under a post that is saying exactly that.

I’m an idiot and i apologize.

Me too!

DP has an option to transmit HDMI signals instead; this is what passive adapters use, and they will still have the same HDMI 2.0 issue. A DP source can be passively adapted to HDMI, but an HDMI source cannot be passively adapted to DP.

You can also get active HDMI adapters, which actively convert the signal and can work with HDMI 2.1. Intel actually has an active converter chip built into their Arc GPUs, which is how they get around this issue.

Countdown until some community member patches 2.1 support in

Not that easy.

Getting HDMI 2.1 support on the Gabe Cube itself essentially requires kernel-level patches. On a “normal” Linux device that is possible (but ill-advised), but on these atomic distros, where even something like Syncthing involves shenanigans to keep running week to week? Ain’t happening. HDMI is not just mapping data to pins and using the right codecs. There are a LOT of handshakes involved along the way (which is also the basis for HDCP, which essentially all commercial streaming services use to some degree).

There ARE methods (that I have personally used) to take a DP->HDMI dongle and flash a super sketchy Chinese (the best source for sketchy tech) firmware to effectively cheat the handshakes. It isn’t true HDMI 2.1 but it provides VRR and “good enough for 2025” HDR at 4k/120Hz. But… I would wager money that is violating at least one law or another.

So expect a lot of those “This ini change fixes all of Windows 11. Just give money to my patreon for it” level fixes. And… idiots will believe it since you can use a dongle to already get like HDMI 2.05 or whatever with no extra effort. And there will likely be a LOT of super sketchy dongles on AliExpress that come pre-flashed that get people up to 2.09 (which is genuinely good enough for most people). But it is gonna be a cluster.

And that is why all of us with AMD NUCs already knew what a clusterfuck this was going to be.

There are also ways to fake the handshake in software. I personally did not try that but from what I have seen on message boards? It is VERY temporary (potentially having to redo every single time you change inputs on your TV/receiver) and it is unclear if the folk who think it works actually tested anything or just said “My script printed out ‘Handshake Successful’, it works with this game that doesn’t even output HDR!”

on these atomic distros where even something like syncthing involves shenanigans to keep active week to week? Ain’t happening.

I don’t see why you couldn’t kexec into a new kernel. kexec loads a kernel into memory from an already running kernel and jumps into it. It’ll suck for the user, as they’ll have to semi-reboot every time they want HDMI 2.1, but it’s easy and doesn’t install anything.

There’s also live patching, but I think that’ll be a bit of work.

Of course the kernel needs to be compiled with those options enabled, but most distros do.

Edit: And they probably won’t work with kernel lockdown/secure boot.

Cable Matters sells plenty of different DP->HDMI 2.1 adapters that work with VRR. The main issue here is that you won’t get CEC if you use those.

I have one of these, but I don’t believe they work with HDMI 2.1 VRR (or at least I’ve never been able to get it to work).

With an LG B9, I have both G-Sync and HDMI 2.1 VRR support, but no FreeSync. I can get most of the HDMI 2.1 feature set working with a DP->HDMI 2.1 adapter, except for any form of VRR. That’s with an RX 9070, but it was a similar situation on a 6800 XT previously.

Yeah. If you just want HDR, Cable Matters is the way to go.

If you buy one and flash it with a sketchy firmware, you can get VRR. But my understanding is the HDR is a smaller range. How much that matters when the vast majority of games aren’t taking advantage of HDR is up to you.

Personally? I love how much HDR pops. I more or less need VRR to not have to care about frame tearing and the like. And when I am running games on an underpowered glorified AMD NUC (or, in my case, ACTUALLY an AMD NUC)… my framerates are neither high nor stable.

I care about HDR (on Linux) way less than I do VRR in general.

Is that the “sketchy” firmware you’re referring to?

I’m explicitly not going to link to it, as I can’t personally vet how safe it is or its origins, but:

No. Check the various issues related to the subject matter. And hobbyist threads on sites like ResetEra.

a super sketchy Chinese (the best source for sketchy tech)

Russian is also good

Ukrainians had a reputation for being the best source for cracks for the DRM on farming and construction equipment that prevented third-party repairs and modifications.

(There’s a reason farmers are one of the biggest groups pushing for Right to Repair.)

Ooh true

It would need to be patched in at the Linux kernel level, which is annoying to say the least.

Pfft. People using monitors or TVs. I just plug into it and play it in my head.

That’s what the Steam Frame is for.

Better Than Life™